- MindByte Weekly Pulse: Quick GitHub, Azure, & .NET Updates

Building Async and Cloud Native organizations - Issue #31

Demystifying Coding: Harnessing APIs, Advancing Accessibility, and Deciphering REST

Welcome to my weekly newsletter! Every week, I bring you the latest news, updates, and resources from the world of coding and architecture. Thank you for joining me, and happy reading!

REST and APIs

If you use ChatGPT, you might know that it has the concepts of web browsing and plugins. With browsing, it fetches a page and uses it as a source, but it does not have a lot of context. If you want more structure, a plugin is a great way to go.

But what if you already have an API with structured endpoints? Then it would be interesting to query that directly. Using some clever tricks, you can instruct ChatGPT to call any API and use the data. See the video below for a walkthrough:

Last week’s edition contained a link to the OWASP top 10 API vulnerabilities. If you want to play with those and see if you can detect them, then this repository is a great start:

On their blog, you can find some examples.

REST must be the most broadly misused technical term in computer programming history. I can’t think of anything else that comes close.

Today, when someone uses the term REST, they are nearly always discussing a JSON-based API using HTTP.

When you see a job post mentioning REST or a company discussing REST Guidelines they will rarely mention either hypertext or hypermedia: they will instead mention JSON, GraphQL(!) and the like.

Only a few obstinate folks grumble: but these JSON APIs aren’t RESTful!

Read more for a brief, incomplete and mostly wrong history of REST, and how we got to a place where its meaning has been nearly perfectly inverted to mean what REST was originally contrasted with: RPC.
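To make the hypermedia point concrete, here is a minimal Python sketch. The bank account resource, its URLs, and the helper names (`LinkCollector`, `available_actions`) are hypothetical and only serve as illustration. It contrasts a plain JSON response, where the client has to know the available actions out-of-band, with a hypermedia response, where the actions travel with the data as links.

```python
from html.parser import HTMLParser

# Typical JSON API response (hypothetical): data only, no hypermedia controls.
# The client must already know which URLs and actions exist for this account.
json_api_response = {"account_number": 12345, "balance": 100.0, "status": "good"}

# Hypermedia response (hypothetical): the same data, plus links that tell the
# client what it can do next.
hypermedia_response = """
<div>
  <div>Account number: 12345</div>
  <div>Balance: $100.00</div>
  <a href="/accounts/12345/deposits">deposits</a>
  <a href="/accounts/12345/withdrawals">withdrawals</a>
</div>
"""


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def available_actions(html):
    """Discover the next possible actions from the response itself."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


print(available_actions(hypermedia_response))
```

With the JSON variant there is nothing to discover: any knowledge of `/deposits` or `/withdrawals` would have to be hard-coded in the client, which is closer to RPC than to REST as originally described.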

Coding technicalities

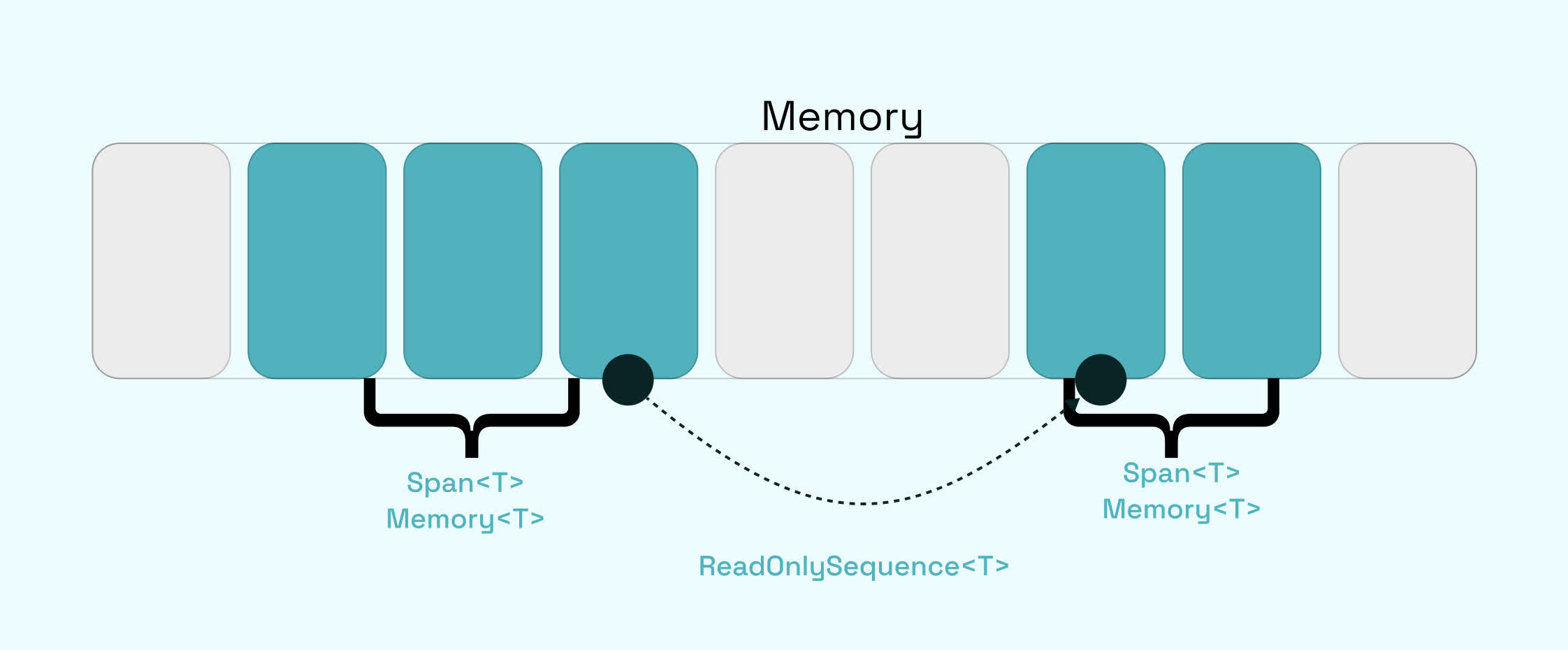

I do not think I have ever used the types that Steven Giesel describes, but that says more about my limited knowledge of them. If you are in the same position, then dive into these memory-specific types:

You would think that to run a service like ChatGPT, you would need an enormous data center; however, you can run lighter large language models on your own machine as well. It might not be that fast, but it gives you more control over the data. Maarten explains how to use a C# library to make a simple console app with an LLM.

Wolverine is an opinionated mediator and service bus solution using modern .NET technology. So what is it doing under the hood? See how it adds logging, correlation, and error handling, how it operates the outbox pattern, and how it finds its dependencies:

GitHub related

A simple thing, but useful when working with Kanban: work-in-progress limits are now possible in GitHub Projects. Each column on your project board can have a limit on the number of items allowed.

It won’t stop you from adding items to that column, but it at least warns you that you have too many items.
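As a rough illustration of such a soft limit, here is a small Python sketch. It does not use the GitHub API; the board data, column names, and the `wip_warnings` helper are made up for the example. Items are never rejected, the function only reports which columns exceed their limit.

```python
from collections import Counter


def wip_warnings(item_columns, limits):
    """Return a warning for every column that holds more items than its limit."""
    counts = Counter(item_columns)
    return [
        f"{column}: {counts[column]} items, limit is {limit}"
        for column, limit in limits.items()
        if counts[column] > limit
    ]


# Hypothetical board: one entry per item, naming the column the item is in.
board = ["Todo", "In progress", "In progress", "In progress", "Done"]
print(wip_warnings(board, {"In progress": 2, "Done": 5}))
```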

Besides some other nice UI improvements, there is also an export to CSV option. People like to work in Excel :-)

GitHub makes it very easy to add images to an issue, pull request, or discussion item. Just copy and paste, and it will automatically upload the image and add the relevant tags so it is shown directly as an image.

The drawback is that you most likely do not spend time thinking about people who are unable to see the images and might be using a screen reader. Adding an alt text (short for alternative text) helps people to understand what the image is about.

Alt text is easy to forget, but luckily you can automate almost everything with GitHub Actions. The article below shows a workflow that responds to issues, comments, and pull requests and checks whether each image has alt text. If not, it adds a comment asking the creator to annotate the image.
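The core of such a check can be sketched in a few lines of Python. This is not the code from the article, just a minimal sketch under my own assumptions: the regular expressions, the list of placeholder alt texts, and the function names are all hypothetical. It scans a Markdown body for images whose alt text is missing or is only a generic placeholder.

```python
import re

# Markdown image: ![alt](url "optional title")
MARKDOWN_IMAGE = re.compile(r"!\[(?P<alt>[^\]]*)\]\((?P<url>[^)\s]+)[^)]*\)")
# HTML image tag and its alt attribute
HTML_IMAGE = re.compile(r"<img\b[^>]*>", re.IGNORECASE)
HTML_ALT = re.compile(r"""\balt\s*=\s*(["'])(?P<alt>.*?)\1""", re.IGNORECASE)

# Assumption: alt texts that carry no real description count as missing.
PLACEHOLDER_ALTS = {"", "image", "img", "screenshot"}


def is_missing_alt(alt):
    """True when there is no alt text or only a generic placeholder."""
    return alt is None or alt.strip().lower() in PLACEHOLDER_ALTS


def images_missing_alt(body):
    """Return the image snippets in body that lack meaningful alt text."""
    missing = []
    for match in MARKDOWN_IMAGE.finditer(body):
        if is_missing_alt(match.group("alt")):
            missing.append(match.group(0))
    for match in HTML_IMAGE.finditer(body):
        alt = HTML_ALT.search(match.group(0))
        if is_missing_alt(alt.group("alt") if alt else None):
            missing.append(match.group(0))
    return missing


comment = (
    "Bug: ![image](https://example.com/a.png) "
    "![Login dialog with an error banner](https://example.com/b.png) "
    "<img src='c.png'>"
)
print(images_missing_alt(comment))
```

A workflow could run a check like this on the body of each new issue or comment and post a reminder whenever the returned list is not empty.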

A great way to improve accessibility!

GitHub's Copilot, powered by OpenAI’s Codex, is radically changing the way coding works. This AI tool, accessible by simply pressing the tab key, enables developers to finish lines of code, generate blocks, or even write entire programs. Over 10,000 organizations and 30,000 Microsoft employees are already using Copilot to boost their productivity. Thomas Dohmke, CEO of GitHub, envisions a future where developers will increasingly need to manage large, complex systems, breaking them down into small building blocks where AI can assist in synthesizing the code. Despite AI's growing involvement, Dohmke stresses that the developer will remain the expert, maintaining oversight of the code and its security.

The implementation of Copilot has allowed developers to use their limited coding hours more efficiently, reducing distractions and keeping them in their creative zone. Dohmke also shares his excitement about Copilot's impressive user satisfaction and retention rates, and the fact that nearly half the code in files where Copilot is enabled is written by the AI tool itself. Moreover, a study showed that developers using Copilot were 55% faster at building a web server than those without it, and they had a higher success rate, suggesting that Copilot could significantly transform the industry.

Dohmke foresees that Copilot could also revolutionize learning and teaching, making the learning process more democratic and exploratory, especially in software development. However, he points out the necessity of a baseline knowledge in computer science to effectively leverage AI assistance. Lastly, he emphasizes the crucial role of open-source projects in the evolution of AI tools like Copilot, asserting that they are fundamental to human progress.

Computing in general

With almost every software product I help build, there is a need for reporting. There are many solutions for this, but nowadays we normally pick Power BI. Since I try to treat everything as a software product, I also want to apply practices like pipelines, source control, and pull requests to reports. This was very hard with Power BI, but it looks like there will be an interesting option: Power BI Desktop Developer Mode.

It is still in preview, but it allows you to store your work as project files, where project, report, and dataset definitions are saved as individual plain-text files in a simple, intuitive folder structure. This allows you to do all the developer-like activities you are used to.

Are you using Bicep for your infra deployments? Then until now you still needed those large JSON parameter files. That is understandable, as Bicep compiles to ARM in the end, but it always felt a bit odd not to have a better alternative.

Well, now there is: Bicep parameter files. Besides using a shorter and more Bicep-specific structure, a parameter file can also refer to the Bicep file that it provides the parameters for. This gives you design-time IntelliSense and validation of the values.

Read more about the various possibilities:

Helpers and Utilities

In a previous edition, I shared a site to check if your website is green, but what about your dependencies, such as fonts, scripts, and images? Are they served from a green host, do they use proper caching, and what would the CO2 emissions be in the end?

I hope you've enjoyed this week's issue of my newsletter. If you found it useful, I invite you to share it with your friends and colleagues. And if you're not already a subscriber, be sure to sign up to receive future issues.

Next week, I'll be back with more articles, tutorials, and resources to help you stay up-to-date on the latest developments in coding and architecture. In the meantime, keep learning and growing, and happy coding!

Best regards, Michiel
